For the past couple of months, we at Egeniq have been working on the brand-new Android TV app for Pathé Thuis, a video-on-demand service in the Netherlands. Now that it is finally available on Google Play, I want to talk about developing for Android TV: what I think about it, the things I liked and the things I didn’t.

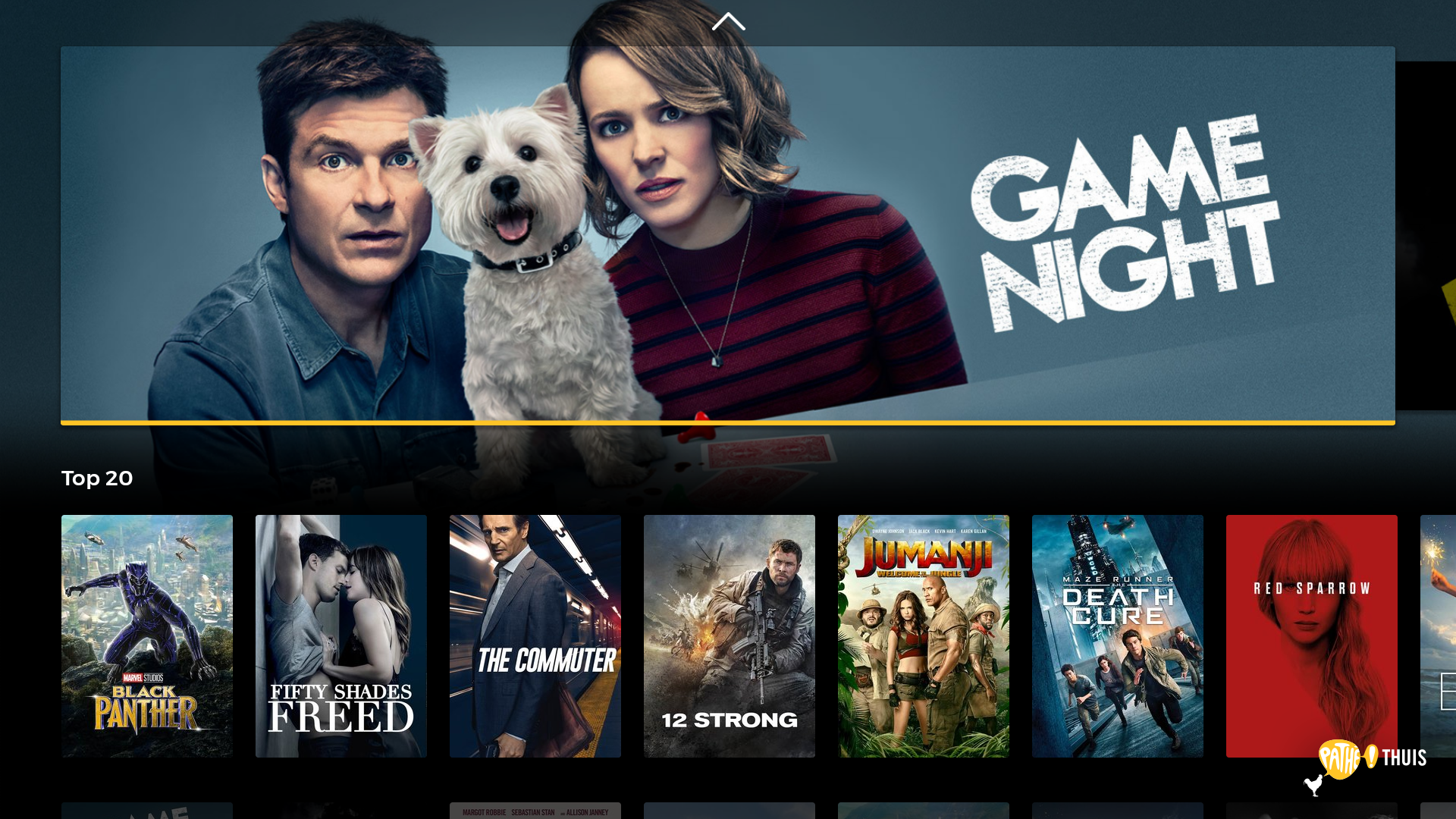

The Pathé Thuis app on Android TV

In my opinion, the best thing about Android TV is that it is the same Android we love on our phones and tablets, with just one big restriction — there’s no touchscreen.

This means that a lot of code can be shared if you have a regular mobile app alongside your TV app; you just have to make sure the user can navigate the screen with a remote or controller.

This restriction also brings the worst part: to make the UI navigable, you have to keep track of user focus, that is, which button, field, tab, etc. the user has selected at any given time.

This is easy in a layout with two buttons, but it can be quite a task when you have auto-hiding tabs at the top, a hidden drawer on the left, and multiple rows and columns of different types of items in the center.

You have to keep track of what should be focusable and what should not, make sure the direction of navigation is logical, and maybe even change the visibility of elements based on where the user is. In short, there’s a lot that can go wrong.

Luckily, Google offers some help in this matter. The Leanback library consists of UI elements, fragments and services which do a lot for you if you want to make something similar to the Leanback showcase app.

For us, the two most important classes it contains are the RowsSupportFragment and VerticalGridSupportFragment.

The former can display a vertical list of horizontal rows, the latter an entire grid of items.

Both of these fragments have an inner RecyclerView, into which you plug an ArrayObjectAdapter and a Presenter (or a PresenterSelector, if you have multiple item types).

Leanback works perfectly if you do things the same way as the showcase app, but if you want to do something more special, you might run into limitations.

In the following sections I will write about the frustrations and problems I faced while working with Android TV, so that maybe I can help you if you are in the same place I was.

Challenge #1: RowsSupportFragment does not remember position inside a row

Although RowsSupportFragment does remember the last selected row when the fragment is recreated from the back stack, it does not remember the position within that row; it selects the first item instead.

When the user selects a movie from one of the recommendation rows, our app opens the detail page of that movie. When the user comes back, the overview should return to the same row and the same movie, not a different one.

In my first attempt to solve this, I saved the subposition manually and restored it as soon as the fragment was restored. However, the subposition is selected asynchronously after the rows have been set up, so the overview would open with the first item selected for a fraction of a second and then jump to the correct item. Our item selection listeners also detected both items, which was not ideal either.

After a lot of source code inspection and trial and error, I came up with a custom RowsSupportFragment that replaces the original one and handles saving the subposition out of the box.
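The custom fragment itself is not reproduced in this post. As a hedged, framework-free sketch of the bookkeeping such a replacement has to do, the hypothetical `RowSelectionMemory` class below remembers the selected item per row and swallows the transient selections that arrive while a restore is pending (the "first item, then the correct item" double event described above). In a real Leanback app the restored pair could be handed to `RowsSupportFragment.setSelectedPosition(row, false, ListRowPresenter.SelectItemViewHolderTask(item))`; that wiring, and whether it avoids the flicker on your Leanback version, is an assumption not covered here.

```kotlin
/**
 * Hypothetical helper: remembers which item was selected in each row, so the
 * overview can restore both the row and the position inside that row.
 */
class RowSelectionMemory {
    private val itemByRow = mutableMapOf<Int, Int>()
    private var lastRow = 0

    // Target of an ongoing restore; while set, other selections are treated as transient.
    private var pendingRestore: Pair<Int, Int>? = null

    /** Call from the item-selected listener. Returns true if the event should be forwarded. */
    fun onItemSelected(row: Int, item: Int): Boolean {
        val pending = pendingRestore
        if (pending != null) {
            // Ignore intermediate selections (e.g. item 0) until the restored item is reached.
            if (pending != Pair(row, item)) return false
            pendingRestore = null
        }
        itemByRow[row] = item
        lastRow = row
        return true
    }

    /** Returns the (row, item) pair to restore and starts suppressing transient events. */
    fun beginRestore(): Pair<Int, Int> {
        val target = Pair(lastRow, itemByRow[lastRow] ?: 0)
        pendingRestore = target
        return target
    }

    /** Stops suppressing events, e.g. if the data changed and the target no longer exists. */
    fun cancelRestore() {
        pendingRestore = null
    }
}
```

Keeping this state outside the fragment means it survives the fragment's view being destroyed and recreated; calling `cancelRestore()` is a safety valve so selection events are not swallowed forever if the restored item never shows up.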

Challenge #2: Navigating to the menu

In our app, we want to show the top menu when the user has reached the top of the page and navigates up once more.

For this, we followed the example of the showcase app and created a custom layout that contains the menu at the top and the main view below it. The layout listens for focus search events, and if an upward search does not find a target, the menu is shown.
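The layout code is not shown in this post, but the decision it makes can be sketched without any Android dependency. In a real app this logic would sit inside a focus search hook, for instance Leanback’s `BrowseFrameLayout.OnFocusSearchListener` or an overridden `ViewGroup.focusSearch(View, Int)`. The `TopMenuController` and `Direction` names, and hiding the menu once focus returns to the content, are assumptions for illustration.

```kotlin
enum class Direction { UP, DOWN, LEFT, RIGHT }

/** Hypothetical controller for an auto-hiding top menu. */
class TopMenuController {
    var isMenuVisible = false
        private set

    // Mirror this onto the menu view: a hidden menu must never be able to take focus.
    val isMenuFocusable: Boolean
        get() = isMenuVisible

    /**
     * Called after the content's own focus search has run.
     * [foundInContent] tells whether that search found a target inside the content.
     * Returns true if focus should move to the menu instead.
     */
    fun onFocusSearch(direction: Direction, foundInContent: Boolean): Boolean {
        if (direction == Direction.UP && !foundInContent) {
            isMenuVisible = true
            return true
        }
        return false
    }

    /** Called when focus goes back to the content, e.g. after pressing DOWN from the menu. */
    fun onContentFocused() {
        isMenuVisible = false
    }
}
```

Tying focusability to visibility in one place is what keeps a hidden menu from stealing focus during normal navigation.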

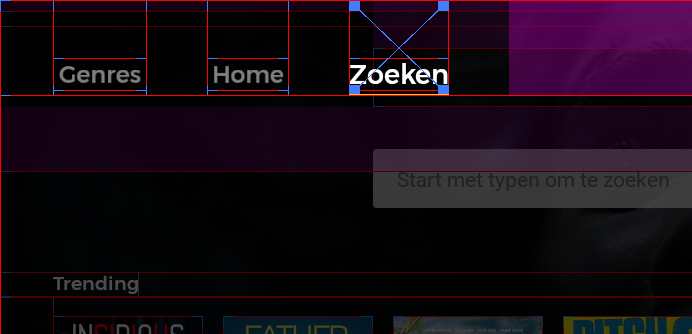

Just make sure that the menu is not focusable while it is hidden; otherwise, focus will simply move over to it. For debugging focus issues, I recommend turning on the “Show layout bounds” setting in the developer options on Android 6+, because it also marks the currently focused view with a big blue X.

If you are using the fragments from the Leanback library to display your items, you need to allow focus to leave the inner RecyclerView at the top, so your outer custom layout can catch the search event:

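```kotlin
// throughFront = true: focus may leave the grid past its first row (upward).
// throughEnd = false: focus stays inside the grid at its last row.
supportRowsFragment.verticalGridView.setFocusOutAllowed(true, false)
```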

This works fine with a RowsSupportFragment, but the same approach does not work with a VerticalGridSupportFragment. This is because the grid fragment already has its own focus search interceptor, which it uses to show and hide the search button at the top (as you can see in the showcase demo).

If you don’t need the search button but want to receive the focus search event yourself, you have to disable that inner search listener.

Challenge #3: Opening pages and transition of focus

When navigating inside the app, you usually open and close pages as you browse items and collections. For example, when you click on a movie, focus jumps from the selected movie to the button on the newly opened detail page.

If you use fragment or activity animations, this transfer of focus happens too quickly: focus leaves the old page and enters the new one right away, instead of in step with the page animation.

The Leanback ItemBridgeAdapter has a so-called FocusHighlightHandler, which by default scales and dims the items depending on whether they are selected.

This works fine if you want exactly the same effect, but if you want something more special, all you can do is disable the highlight handler via the public API.

You can assign a state list animator to the item layout that reacts to focus, but the problem is that the item loses focus immediately when it is clicked, so it always animates to the unfocused state on click.

Our solution was to inject our own focus highlight handler.

We use a single highlight handler throughout the app, with an option to delay changes. When an item is clicked, we delay all highlight changes by a set amount of time, so the selection highlight stays in place and its animation does not disrupt the page animation.

Instead of the focused state, our item layouts react to the views’ selected state, which is set by the handler instance.
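Our actual handler is not shown here. The delaying idea can be sketched without Android, with time passed in explicitly so the logic is testable. In an app, `isSelected` would correspond to `View.setSelected()` (which a state list animator can react to via `state_selected`), and `flush` would be scheduled with something like `Handler.postDelayed`. `ItemView` and `DelayingHighlightHandler` are hypothetical stand-ins, not Leanback’s `FocusHighlightHandler` interface.

```kotlin
/** Stand-in for an item view; only the selected flag matters here. */
class ItemView(val id: String) {
    var isSelected = false
}

/**
 * Hypothetical app-wide highlight handler. It drives the views' selected state
 * instead of relying on focus, and it can hold back changes after a click.
 */
class DelayingHighlightHandler(private val clickDelayMs: Long) {
    private var holdUntilMs = 0L
    private val pending = mutableListOf<Pair<ItemView, Boolean>>()

    /** After a click, keep the current highlight for [clickDelayMs]. */
    fun onItemClicked(nowMs: Long) {
        holdUntilMs = nowMs + clickDelayMs
    }

    fun onItemFocused(view: ItemView, hasFocus: Boolean, nowMs: Long) {
        if (nowMs < holdUntilMs) {
            pending += view to hasFocus // defer while the page animation runs
        } else {
            view.isSelected = hasFocus
        }
    }

    /** Applies deferred changes once the hold has expired. */
    fun flush(nowMs: Long) {
        if (nowMs < holdUntilMs) return
        pending.forEach { (view, focused) -> view.isSelected = focused }
        pending.clear()
    }
}
```

Because focus loss on click is queued rather than applied, the clicked item keeps its highlight until the page transition is over.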

Challenge #4: DialogFragment with animations

We use DialogFragment in a few places in our app, because it is useful for creating modal pages that focus cannot escape (error dialogs, for example).

DialogFragment is just a wrapper around a dialog, with the fragment’s view placed inside that dialog. When you pop the fragment, the dialog is dismissed along with its contents.

Because the dialog is dismissed immediately, fragment animations do not work on it, since they require the view to remain visible.

We then tried setting an animation on the created dialog itself, but without luck: nothing we did made our dialog animate. So we resorted to a workaround: we wrote our own DialogFragment that intercepts the dismiss() call and runs the hide animation first. For the show animation, you can animate the fragment view in the onViewCreated() method.

This fragment runs the hide animation before the final dismiss. Just make sure you don’t animate it when you open multiple fragments at the same time, or when you open a new fragment immediately after dismissing it, because the delayed dismiss will mess with the back stack. In those cases, call dismissImmediately() instead.
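Our DialogFragment subclass is not reproduced here. Its control flow can be sketched without Android: the hypothetical `AnimatedDismisser` runs a hide animation and only then calls the real dismiss, while `dismissImmediately()` skips the animation. In an actual subclass you would override `dismiss()`, start the animation with something like `view.animate().alpha(0f).withEndAction { ... }`, and call `super.dismiss()` from the end action; those details are assumptions, not our exact code.

```kotlin
/**
 * Hypothetical, framework-free model of the dismiss interception.
 * [runHideAnimation] must invoke its callback when the animation has ended;
 * [performDismiss] stands in for DialogFragment's original dismiss().
 */
class AnimatedDismisser(
    private val runHideAnimation: (onEnd: () -> Unit) -> Unit,
    private val performDismiss: () -> Unit
) {
    private var dismissing = false

    /** Plays the hide animation first; repeated calls while it runs are ignored. */
    fun dismiss() {
        if (dismissing) return
        dismissing = true
        runHideAnimation { performDismiss() }
    }

    /** Skips the animation, e.g. when another fragment is opened right afterwards. */
    fun dismissImmediately() {
        if (dismissing) return
        dismissing = true
        performDismiss()
    }
}
```

The `dismissing` guard matters on TV, where impatient users press Back several times and each press would otherwise start another hide animation.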

Challenge #5: Voice input

As you may have experienced, typing letters with a remote control is very time-consuming. That’s why most apps offer voice input for frequently used input fields (usually for searching in the app).

If we want to use this feature in our own app, we can use the built-in speech recognizer of Android, which is quite simple to use and free of charge.

The classes you will be interacting with are SpeechRecognizer and RecognizerIntent. First, you create a new intent that contains all the details of how you want the speech to be recognized.

While integrating voice recognition into the app, I discovered that there are two ways to recognize speech:

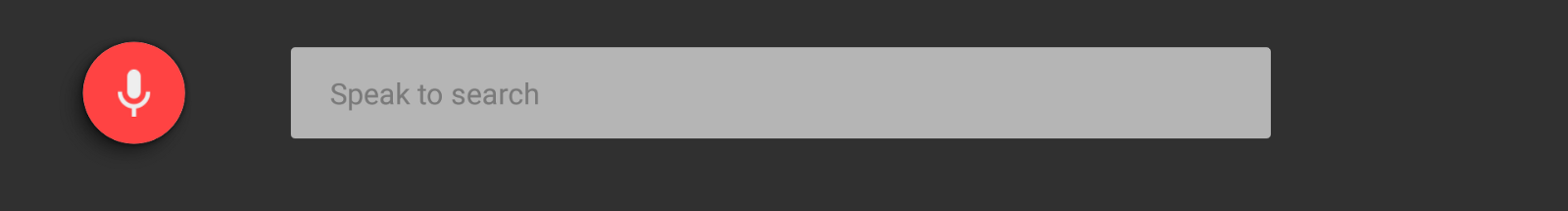

- Use the system overlay: if you start the intent as an activity (startActivityForResult(recognizerIntent, requestCode)), it opens a system overlay on top of your app. The user speaks into the overlay, and when they are finished, you get the results back in the activity result. With the overlay you don’t need the permission to record audio, but you have to line up your UI precisely with the overlay so the transition looks seamless to the user.

- In-app voice recognition: if you don’t want the overlay, you can also start recognition by passing the intent to a SpeechRecognizer instance with speechRecognizer.startListening(recognizerIntent). In this case you need the audio recording permission, but you can design your own UI around it. You receive the partial and final results through the RecognitionListener callbacks.
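Whichever path you choose, the recognizer hands back a list of candidate transcripts: under `RecognizerIntent.EXTRA_RESULTS` in the activity result data, or under `SpeechRecognizer.RESULTS_RECOGNITION` in the `Bundle` passed to `RecognitionListener.onResults()`, optionally with confidence scores (`RecognizerIntent.EXTRA_CONFIDENCE_SCORES` or `SpeechRecognizer.CONFIDENCE_SCORES`). A minimal sketch for turning those lists into the text for a search field follows; `bestTranscript` is a hypothetical helper, and extracting the lists from the Intent or Bundle is left out.

```kotlin
/**
 * Hypothetical helper: picks the transcript to put into the search field.
 * [results] are the candidate transcripts; [scores] are optional confidence
 * values that may be missing or shorter than [results].
 */
fun bestTranscript(results: List<String>?, scores: FloatArray? = null): String? {
    val candidates = results.orEmpty()
        .mapIndexed { index, text -> text.trim() to (scores?.getOrNull(index) ?: -1f) }
        .filter { (text, _) -> text.isNotEmpty() }
    if (candidates.isEmpty()) return null
    // Prefer the highest reported confidence; on ties (or without scores),
    // maxByOrNull keeps the first candidate, i.e. the recognizer's own order.
    return candidates.maxByOrNull { it.second }?.first
}
```

Treating the scores as optional keeps the same helper usable for both the overlay and the in-app path.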

We investigated both options, and in the end we went with the system overlay for these reasons:

- On one of our test devices (Mi Box 3), the in-app version would hang quite frequently: the recognizer would start, do nothing for 10 seconds, and exit with a network error. With the system overlay, the hangs were much less frequent, though they still happened occasionally, in other apps as well. From the logs, it seemed that the Bluetooth connection with the remote had failed.

- Other apps (YouTube, Play Movies) seem to use it as well, so people using our search function are already familiar with how it works.

- No need for the RECORD_AUDIO permission.

Conclusion

Altogether, I really enjoyed working on my first Android TV application. Because an Android TV is treated like any other Android device, just with a huge screen, if you can develop for phones and tablets, you can also develop for TV.

The two things you have to get to know better are focus handling and the Leanback library. Both can cause some confusion, mainly because of the lack of documentation and the way the adapters and presenters are tied to the special fragments, but on the other hand I am relieved that they give you the tools to create a simple item selection screen relatively easily.

Android TV also lets us bring our existing apps to the big screen without having to write a completely separate HTML app. TV manufacturers also see the opportunity: more and more TVs are being released with Android on them. For those who don’t want to spend a lot on a new TV, there are also set-top boxes, some of which are quite cheap.

So, as both a developer and a consumer, I welcome the breakthrough Android TV is making in the market: it is a platform that fits the big screen well and is a joy to work with.